Sonoma Sky Alpha & Dusk Alpha: Real-World Performance in Cline

Two mysterious AI models appeared on Vercel AI Gateway and OpenRouter this week: Sonoma Sky Alpha and Sonoma Dusk Alpha. With 2-million-token context windows and free alpha access, they've generated significant buzz in the AI community.

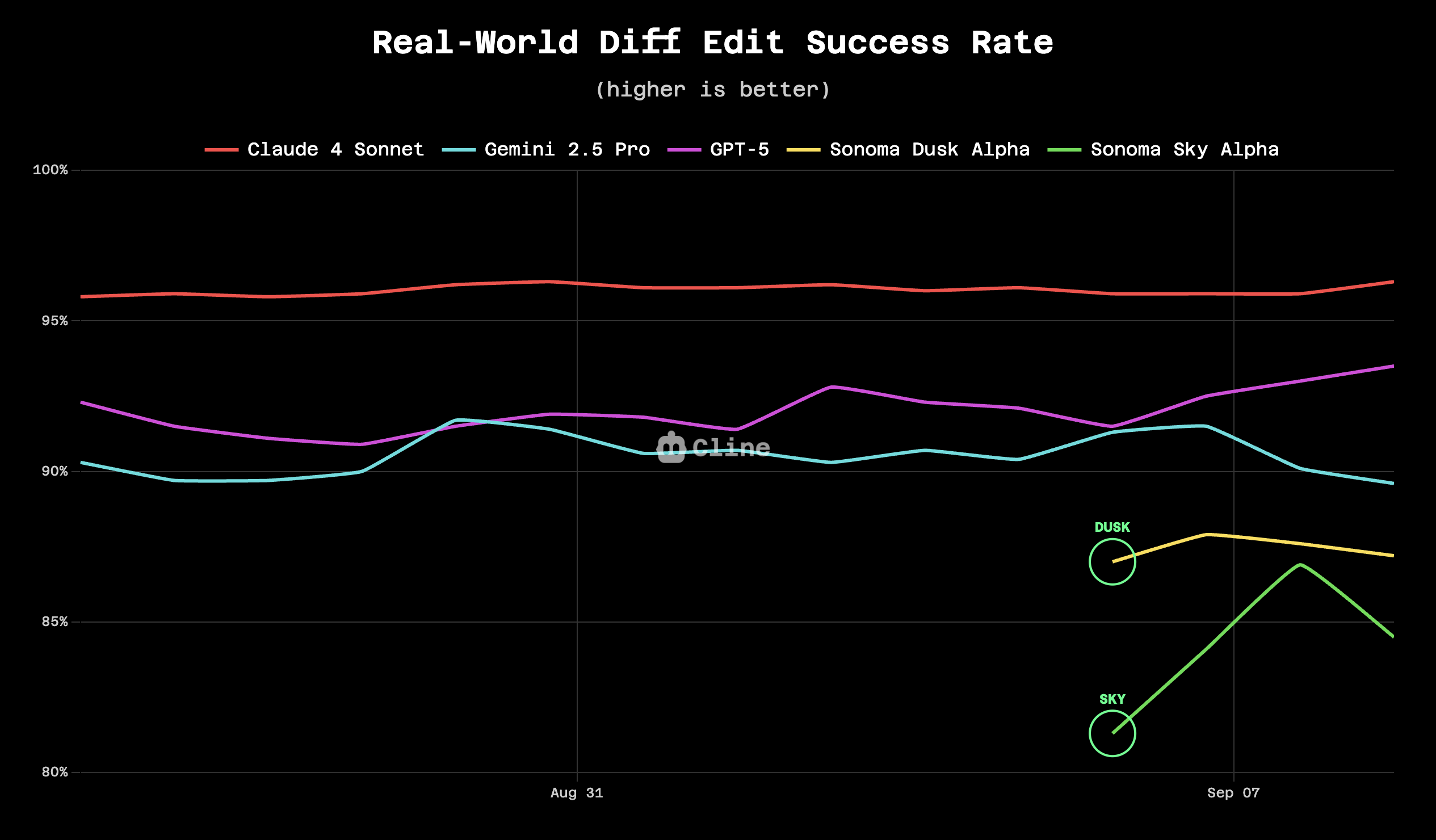

We've been tracking their performance in Cline across thousands of real coding tasks. Here's what the data shows.

The Models

Sky - Positioned as the more capable reasoning model

Dusk - Designed for faster inference

Across thousands of diff edit operations in Cline over the past two weeks, success rates break down as follows:

- Claude 4 Sonnet: 96% success rate

- GPT-5: 92% success rate

- Gemini 2.5 Pro: 90% success rate

- Dusk: 87% success rate

- Sky: 84% success rate
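Percentages that sit close together can hide how large the gap really is. The comparison gets clearer when you flip each figure into a failure count. The sketch below uses only the success rates listed above. It treats each rate as "failed edits per 100 attempts" and compares every model against Claude 4 Sonnet. `failures_per_100` and `failure_multiple` are illustrative helper names, not part of Cline.

```python
# Diff edit success rates from the list above (percent of edits that succeeded).
SUCCESS_RATES = {
    "Claude 4 Sonnet": 96,
    "GPT-5": 92,
    "Gemini 2.5 Pro": 90,
    "Dusk": 87,
    "Sky": 84,
}


def failures_per_100(success_pct: int) -> int:
    """Expected failed diff edits per 100 attempts at a given success rate."""
    return 100 - success_pct


def failure_multiple(model: str, baseline: str = "Claude 4 Sonnet") -> float:
    """How many times more often `model` fails than `baseline`."""
    return failures_per_100(SUCCESS_RATES[model]) / failures_per_100(SUCCESS_RATES[baseline])


for name, rate in SUCCESS_RATES.items():
    print(f"{name:16} {failures_per_100(rate):>2} failures per 100 edits, "
          f"{failure_multiple(name):.2f}x the baseline")
```

Seen this way, Sky's 84% means 16 failed edits per 100, four times Claude 4 Sonnet's 4. Dusk's 87% works out to 3.25 times as many failures.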

What this means

The 2-million-token context window is impressive on paper, but reliability matters more for production coding work. Both Sonoma models are notably fast, yet each trails every established model in the comparison on diff edit accuracy.

Our Discord community has reported mixed experiences: some users appreciate the speed, while others have run into hallucinations and tool-calling issues.

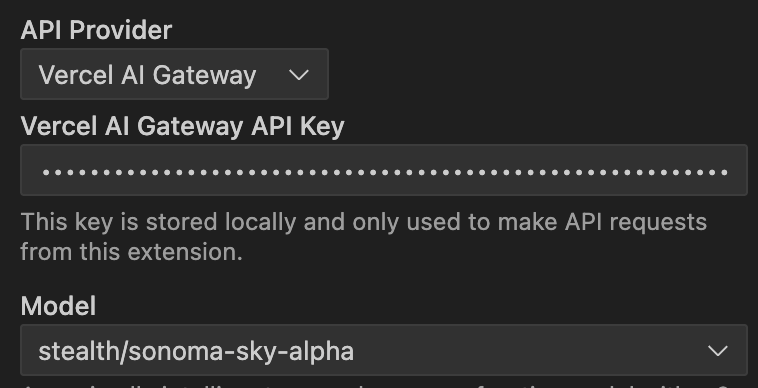

How to try Sky & Dusk

Both models are available free during the alpha period through the Vercel AI Gateway and OpenRouter.

Given the performance gaps, we recommend keeping Claude or another reliable model as your primary choice while experimenting with the Sonoma models for non-critical tasks.
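Inside Cline, you choose the model in your provider settings. If you also call the models directly, the "reliable primary, Sonoma for non-critical work" split can be expressed as a small routing rule. The sketch below uses OpenRouter's OpenAI-compatible chat completions endpoint and only builds the request, without sending it. The model IDs `anthropic/claude-sonnet-4` and `openrouter/sonoma-dusk-alpha` are assumptions, so check OpenRouter's model list before relying on them. The `critical` flag and helper names are hypothetical.

```python
import json
import os
import urllib.request

# OpenRouter's OpenAI-compatible chat completions endpoint.
OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"
# Assumed model IDs; alpha model IDs in particular may change or be withdrawn.
PRIMARY_MODEL = "anthropic/claude-sonnet-4"
EXPERIMENTAL_MODEL = "openrouter/sonoma-dusk-alpha"


def pick_model(critical: bool) -> str:
    """Hypothetical routing rule: critical work stays on the primary model."""
    return PRIMARY_MODEL if critical else EXPERIMENTAL_MODEL


def build_request(prompt: str, critical: bool, api_key: str) -> urllib.request.Request:
    """Build (but do not send) a chat completion request for the chosen model."""
    body = {
        "model": pick_model(critical),
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


if __name__ == "__main__":
    req = build_request("Rename foo to bar in utils.py", critical=False,
                        api_key=os.environ.get("OPENROUTER_API_KEY", ""))
    # To actually send it: json.load(urllib.request.urlopen(req)) parses the reply.
    print(json.loads(req.data)["model"])
```

Keeping the routing decision in one function makes it easy to move a task class back to the primary model if the Sonoma models misbehave on it.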

What does this mean for you?

The Sonoma Alpha models are an interesting experiment in AI model deployment: mysterious origins, impressive specs, free access. But when it comes to real coding work, established models still lead on reliability.

The 2M context window shows promise for future applications, but current performance suggests these models need more development before they're ready for production use.

Data is based on Cline usage from August 26 to September 9, 2025. The Sonoma models appeared on September 6, so their figures cover only the final few days of that window. Performance may vary with task complexity and use case.