GPT-5-Codex Now in Cline

GPT-5-Codex is now available in Cline. It's OpenAI's version of GPT-5 optimized specifically for coding agents, not general conversation.

The basics

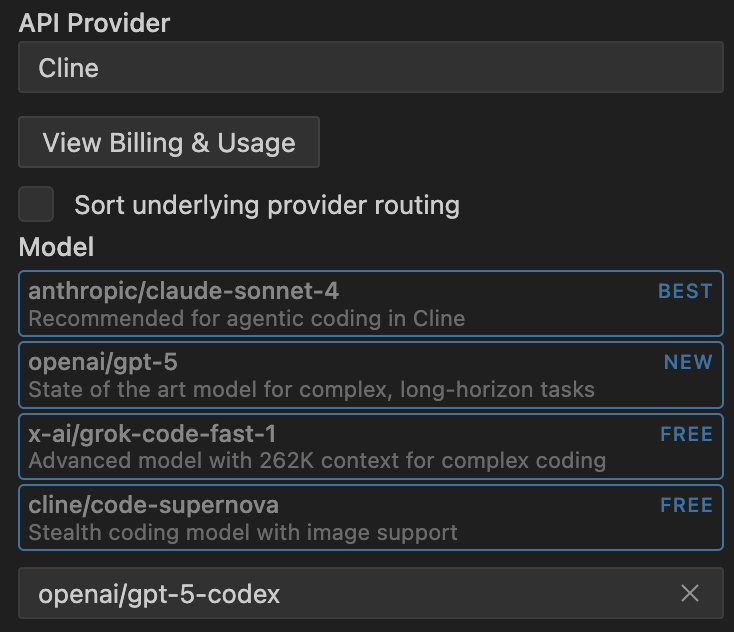

GPT-5-Codex brings a 400K context window at $1.25 per million input tokens and $10 per million output tokens, matching GPT-5's pricing. It's available through the Cline provider in your model dropdown.
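To make the per-million-token pricing concrete, here is a rough cost estimate for a single task. It assumes every token is billed at the standard listed rate, with no caching or other discounts. The token counts and the `estimate_cost` helper are hypothetical and for illustration only.

```python
# Listed GPT-5-Codex rates, in US dollars per million tokens.
INPUT_PRICE_PER_M = 1.25
OUTPUT_PRICE_PER_M = 10.00


def estimate_cost(input_tokens: int, output_tokens: int) -> float:
    """Hypothetical helper: rough cost in USD at the listed rates.

    Assumes all tokens are billed at the standard rate (no discounts).
    """
    input_cost = (input_tokens / 1_000_000) * INPUT_PRICE_PER_M
    output_cost = (output_tokens / 1_000_000) * OUTPUT_PRICE_PER_M
    return input_cost + output_cost


# Hypothetical task: 200K tokens of context in, 10K tokens out.
cost = estimate_cost(200_000, 10_000)
print(f"${cost:.2f}")  # prints $0.35
```

Output tokens cost eight times as much as input tokens. In this example, 10K output tokens cost $0.10, while 200K input tokens cost $0.25.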

What makes it different from GPT-5

Three key differences set GPT-5-Codex apart:

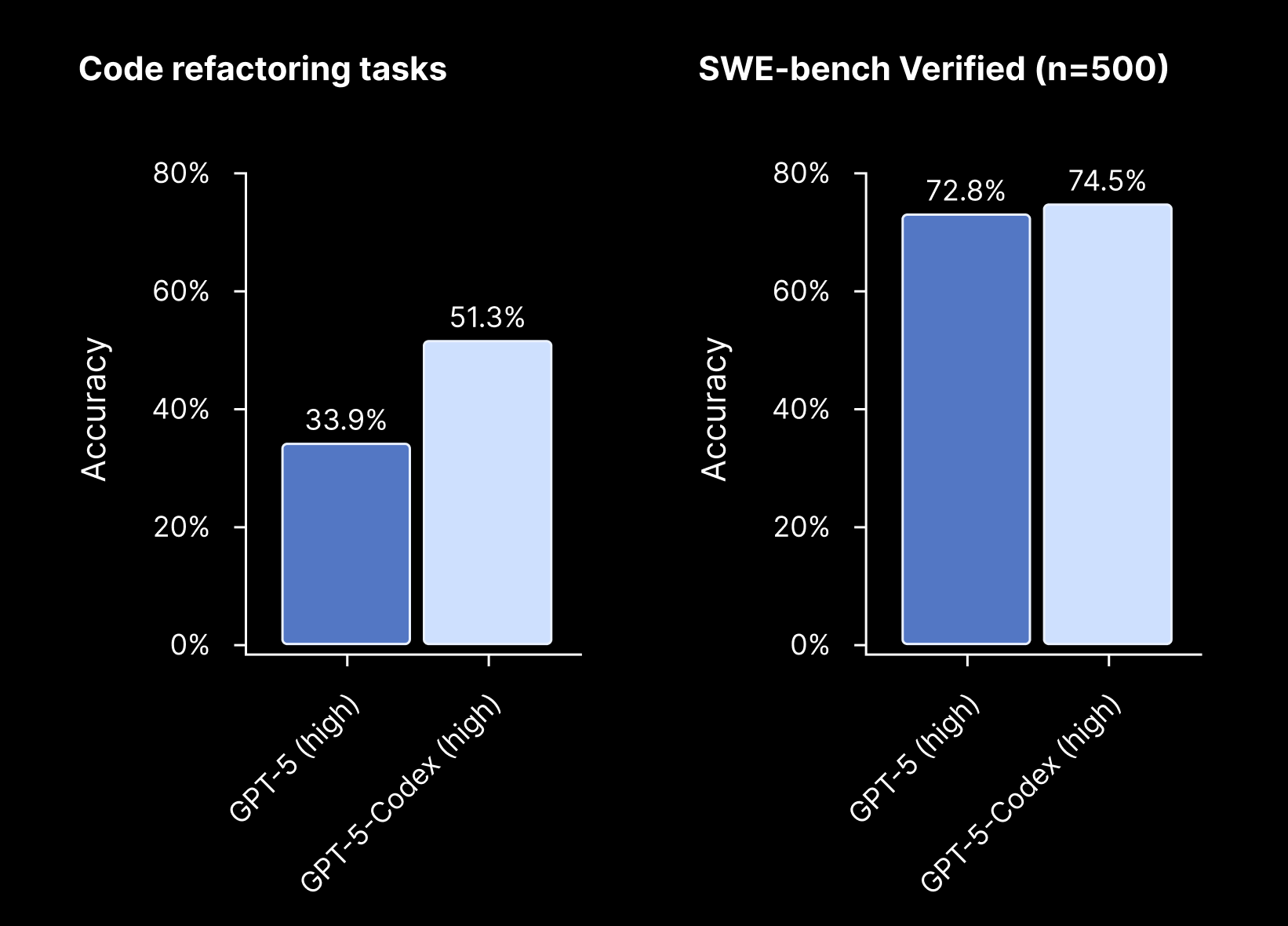

Adaptive reasoning. The model dynamically adjusts its thinking based on task complexity. According to OpenAI, it uses 93.7% fewer tokens than GPT-5 on simple requests. On complex refactoring or debugging, it thinks twice as long, iterating through implementations and test failures until it delivers working code.

Built for coding agents. GPT-5 is a general-purpose model, but GPT-5-Codex was trained on real-world engineering tasks: building projects from scratch, debugging, large-scale refactoring, and code review. It's more steerable, follows your instructions closely, and produces cleaner code without being told to.

Among other things, it's much terser than the Claude family of models.

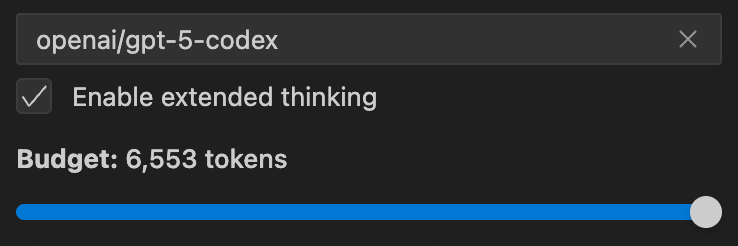

Variable thinking. This feature pairs well with Cline's thinking slider. Max it out, and the model will think exactly as much as the task needs, no more and no less. It adapts its reasoning effort dynamically instead of thinking for a fixed duration.

Using it in Cline

In Cline's settings, choose your provider, then select gpt-5-codex from the model dropdown. Expect a much terser Cline than you may be used to with Claude Sonnet 4 ("YOU'RE ABSOLUTELY RIGHT").

Ready to try GPT-5-Codex? Update Cline and share your experiences on Reddit or Discord.

-Nick