Cline v3.25: The Coding Agent Built for Hard Problems

We're very excited about this release. A lot of our users have turned to Cline because it’s simply better at tackling complex problems. When you give frontier models the context they need, the results speak for themselves, and with Cline, developers can tap their full potential.

In v3.25, we've set out to make Cline even more capable on the longest, most complex coding tasks, which trip up most agents for two key reasons:

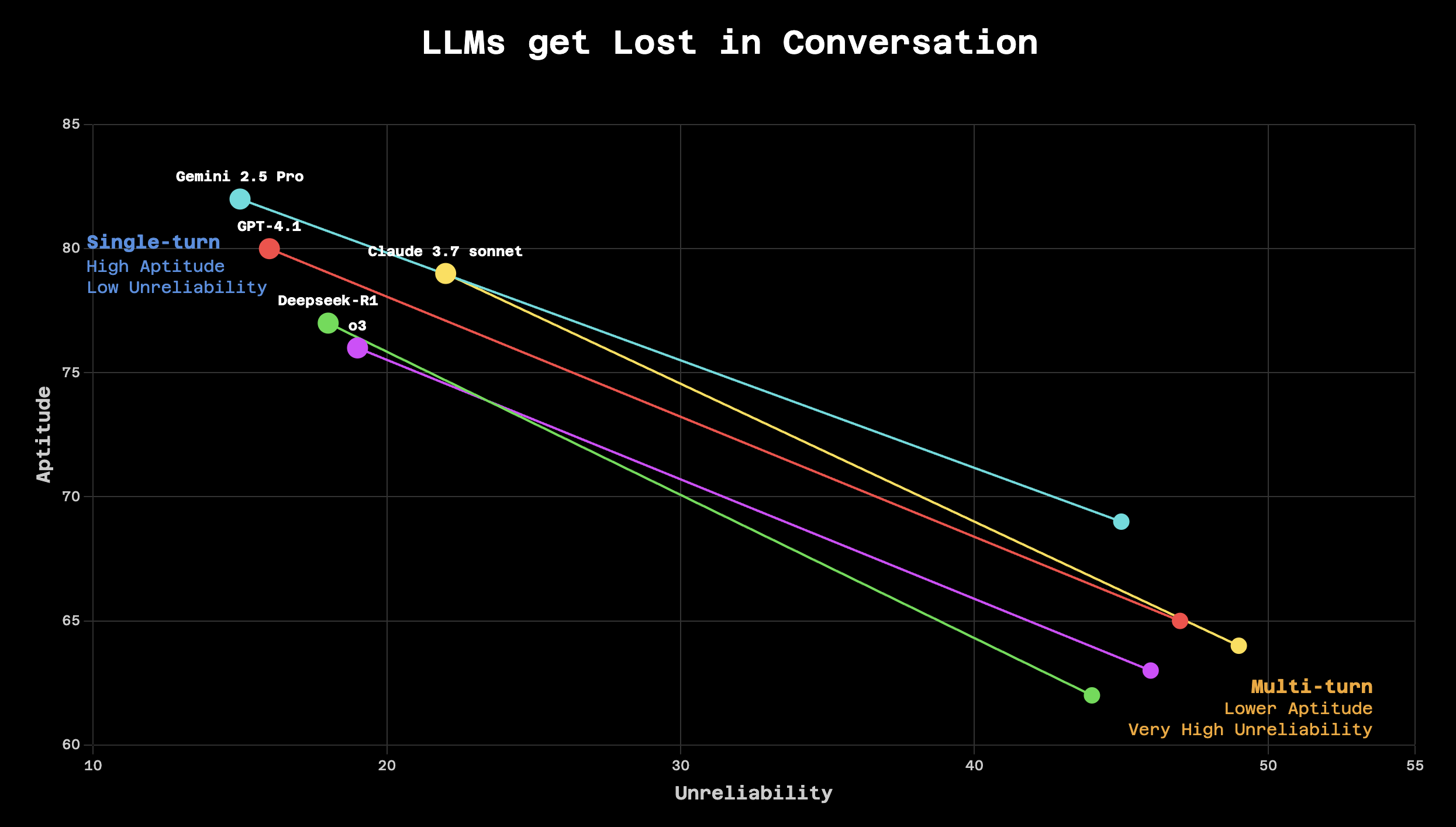

- LLM performance degrades as context size increases. Researchers call this the "lost in the middle" phenomenon, where information in the middle of a long context gets overlooked.

- Multi-turn agents compound this effect. If your model is 95% accurate on turn one, it might be 92% accurate on turn two as context accumulates; by turn ten, you're down to 70%; by turn twenty, your agent is essentially hallucinating, having lost the thread of what it was originally trying to accomplish.

This compounding effect happens because each turn adds more context pollution:

- the model's own generated explanations and assumptions

- error corrections and clarifications

- tool outputs and file contents

- previous attempts that didn't quite work

It's not just that the context window fills up; it's that the signal-to-noise ratio degrades with each interaction. Your original task gets buried deeper in the middle of an increasingly noisy context.

How we optimize for turn #50

We recognized that simply waiting for bigger context windows wouldn't solve this problem. Anthropic just released a 1-million-token context window for Claude Sonnet 4, which is incredible, but a million tokens of polluted context is still polluted context. The degradation curve doesn't disappear; it just gets stretched out.

So we built three interlocking systems that keep Cline on track, regardless of task complexity or length. Here's how you use them:

1. Deep Planning ensures you start with perfect context

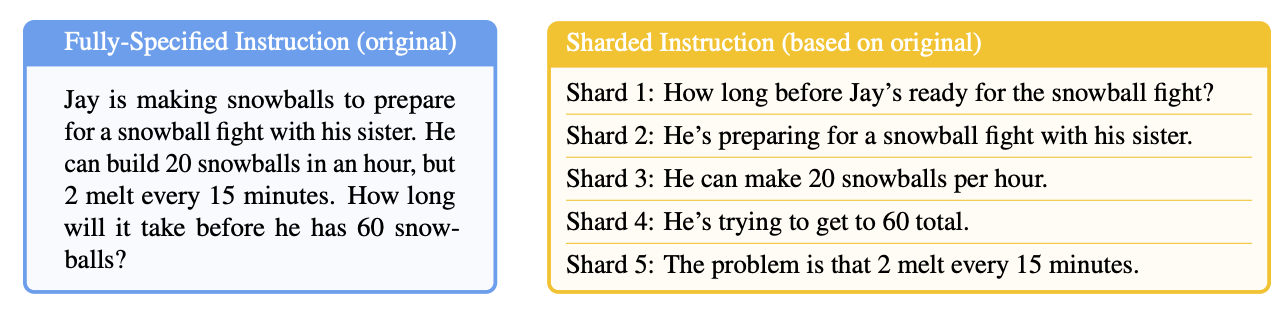

When you run Cline’s /deep-planning command with your task, you kick off a four-step planning process:

- Cline works quietly, investigating your entire codebase: it greps for relevant code, reads files, analyzes patterns, maps dependencies, and builds a mental model of your project.

- Only after this investigation does Cline engage with you, asking targeted questions to clarify ambiguities.

- Then it produces a comprehensive `implementation_plan.md`, which it saves in your project root.

- Last, Cline starts a new task loaded with a perfectly planned prompt that @-mentions key files and `implementation_plan.md`, so a fresh agent can take over with them in context.

At this point, you and Cline have crafted the perfect plan to hand off to the next agent.


Here's why this improves performance: when Cline starts the actual implementation with fresh context, it has a perfect, distilled plan to work from. No pollution from exploration, no accumulated assumptions, just pure implementation intent.

By conducting all exploration and clarification during the planning phase (with its inevitable back-and-forth, dead ends, and accumulated assumptions) and then distilling everything into a single, comprehensive plan, we eliminate the context pollution that degrades performance by turn twenty. The implementation starts fresh with only the refined plan as context, achieving the 95% optimal performance that comes from consolidated instructions rather than suffering the 39% degradation of multi-turn dialogue (see below).

We improve performance during Act mode by creating a clean handoff, so that Cline has only high-value tokens in context.

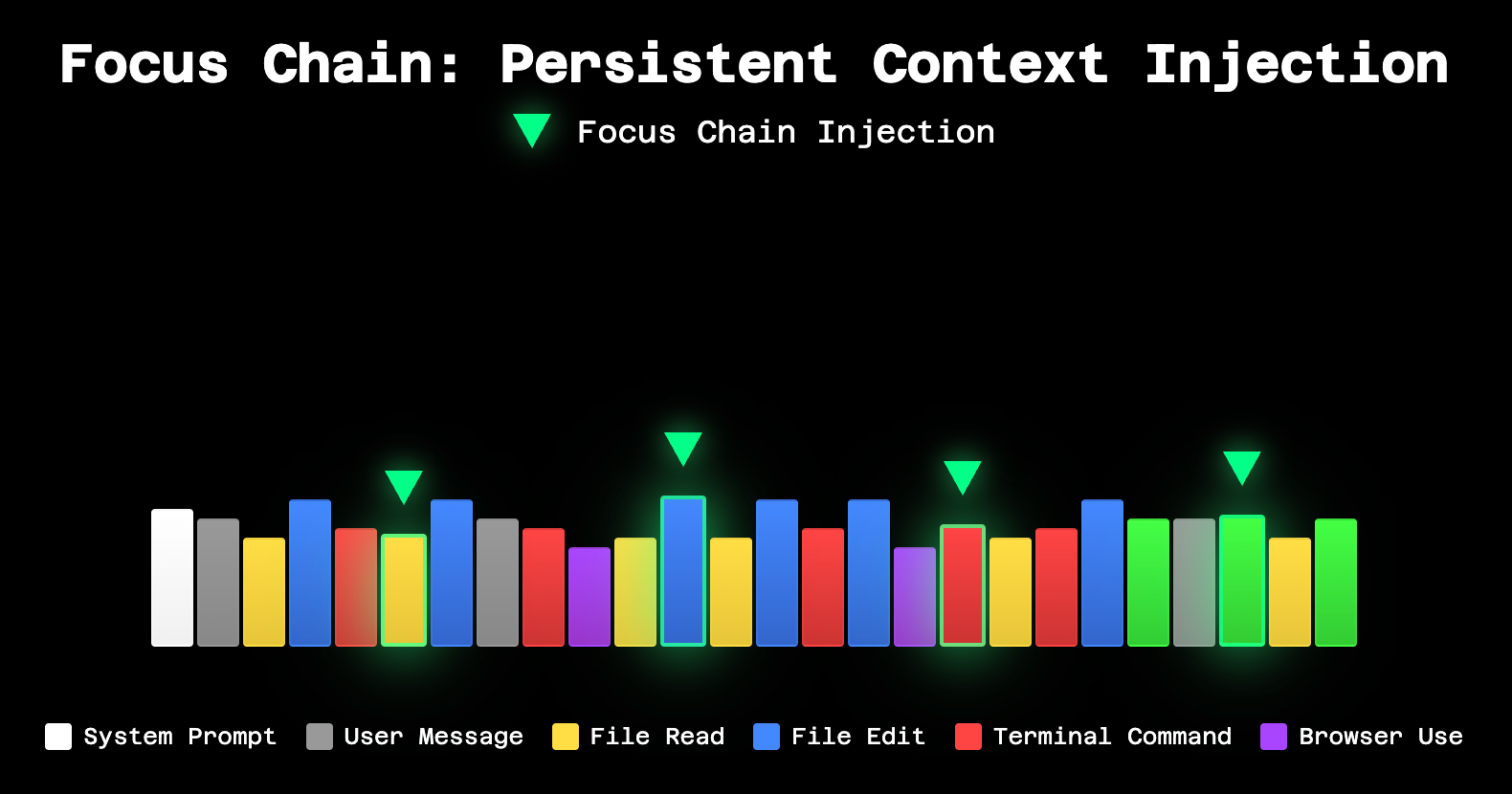
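The handoff at the end of Deep Planning can be sketched as a function that assembles the fresh task's opening prompt. This is a minimal illustration, not Cline's implementation: the name `build_handoff_prompt`, the prompt wording, and the assumption that files are referenced as `@/relative/path` mentions are all hypothetical. What it shows is the core idea: the new task carries only the distilled plan and pointers to key files, never the exploratory conversation that produced them.

```python
from pathlib import PurePosixPath


def build_handoff_prompt(task: str, plan_path: str, key_files: list[str]) -> str:
    """Assemble the opening prompt for a fresh implementation task.

    Hypothetical sketch: only the plan and key-file mentions are carried
    over; none of the planning-phase back-and-forth is included.
    """

    def mention(path: str) -> str:
        # Assumed mention syntax: "@/" plus a normalized project-relative path
        # (PurePosixPath collapses "./" prefixes and duplicate slashes).
        return "@/" + str(PurePosixPath(path))

    plan = mention(plan_path)

    # Keep key files in their given order, skipping duplicates and the plan itself.
    file_mentions: list[str] = []
    for path in key_files:
        m = mention(path)
        if m != plan and m not in file_mentions:
            file_mentions.append(m)

    lines = [
        f"Task: {task}",
        f"Follow the implementation plan in {plan}.",
        "Key files:",
        *(f"- {m}" for m in file_mentions),
    ]
    return "\n".join(lines)
```

For example, `build_handoff_prompt("Add session expiry", "implementation_plan.md", ["src/auth/session.ts", "./src/auth/session.ts"])` yields a four-line prompt listing the plan and a single `@/src/auth/session.ts` mention. Keeping the prompt this small is the point: every token in it is high-value.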

2. The Focus Chain is a persistent north star

Now Cline has a perfect plan with which to start its task. But even with a perfect plan, agents drift, and that is where the Focus Chain comes in. When enabled (it is by default in v3.25), Cline automatically generates a todo list from your task and, crucially, keeps re-injecting that list into the context at regular intervals, where Cline reads and updates it so it is never forgotten.

This isn't just a UI nicety (though it is nice!). Every six messages (by default), Cline is reminded: "Here's what you're working on. Here's what you've completed. Here's what's next." It's a persistent north star that cuts through the accumulating context noise, pulling Cline back to the main thread.

Cline updates his own checklist of todos, which are injected back into context every six turns

The Focus Chain adapts as work progresses. Items get checked off, new tasks emerge, and priorities shift, but the core mission never gets lost. When the model's attention might be wandering due to context degradation, the Focus Chain yanks it back on track.
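The reminder loop behind the Focus Chain can be sketched in a few lines. This is a hypothetical illustration assuming a plain list-of-dicts message history: the `FocusChain` and `TodoItem` classes, the checklist format, and the reminder message layout are invented for this sketch. Only the default interval of six messages comes from this post.

```python
from dataclasses import dataclass, field


@dataclass
class TodoItem:
    text: str
    done: bool = False


@dataclass
class FocusChain:
    """Hypothetical sketch of a todo list that is periodically re-injected."""

    items: list[TodoItem] = field(default_factory=list)
    interval: int = 6  # default reminder interval described in the post
    _since_reminder: int = field(default=0, init=False)

    def render(self) -> str:
        # Markdown-style checklist: "[x]" for completed items, "[ ]" for pending.
        return "\n".join(
            f"- [{'x' if item.done else ' '}] {item.text}" for item in self.items
        )

    def complete(self, text: str) -> None:
        # Check off every item whose text matches exactly.
        for item in self.items:
            if item.text == text:
                item.done = True

    def add(self, text: str) -> None:
        # New tasks can emerge mid-run; they join the end of the chain.
        self.items.append(TodoItem(text))

    def on_message(self, history: list[dict]) -> None:
        """Call after each message; every `interval` messages, append a reminder."""
        self._since_reminder += 1
        if self._since_reminder >= self.interval:
            history.append({
                "role": "user",
                "content": "Current focus chain (review and update):\n" + self.render(),
            })
            self._since_reminder = 0
```

In use, the agent loop calls `on_message` after every message; on the sixth call, a reminder containing the current checklist is appended to the history, and the model's calls to `complete` and `add` keep that checklist in sync with the work.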

For a deep dive on why we built /deep-planning and the Focus Chain, read "Focus: attention isn't enough."

3. Auto Compact compresses & continues the conversation

Eventually, even with perfect planning and persistent focus, you approach the context limit. When that happens, Cline automatically creates a comprehensive summary of everything that's happened: all the technical decisions, code changes, and progress made. It then replaces the bloated conversation history with this compressed summary and continues exactly where it left off.

With Auto Compact, a task that requires 5 million tokens of interaction can be completed using a 200k context window. The 1-million-token context of Claude Sonnet 4 becomes rocket fuel, not because you can fit more pollution, but because you can go longer between compressions, maintaining higher fidelity throughout.
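The compaction step described above can be sketched as a single check run before each model call. This is an illustration under stated assumptions, not Cline's code: the function name `maybe_compact`, the 80% trigger threshold, the `count_tokens` and `summarize` callables, and the rule of always keeping the first (task) message verbatim are all hypothetical. In a real agent, `summarize` would itself be a model call.

```python
from typing import Callable

Message = dict[str, str]


def maybe_compact(
    history: list[Message],
    count_tokens: Callable[[list[Message]], int],
    summarize: Callable[[list[Message]], str],
    context_window: int,
    threshold: float = 0.8,  # hypothetical trigger point, not Cline's setting
) -> list[Message]:
    """Replace the history with a summary once it nears the context limit.

    Assumption for this sketch: the first message holds the original task
    and is always kept verbatim; everything after it is summarized.
    """
    if count_tokens(history) < threshold * context_window:
        return history  # plenty of room left: leave the conversation untouched

    task, rest = history[0], history[1:]
    summary = summarize(rest)
    return [
        task,
        {"role": "user", "content": "Summary of progress so far:\n" + summary},
    ]
```

Because each compaction frees most of the window, the total interaction a task can sustain is bounded by how many summarize-and-continue cycles it runs rather than by the window size; a larger window simply means fewer, less lossy cycles.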

Combine the trio of Deep Planning, the Focus Chain, and Auto Compact to unleash a coding agent capable of solving complex problems.

Here are some other updates we made in v3.25:

- Added 200k context window support for Claude Sonnet 4 in the OpenRouter and Cline providers.

- Added custom base URL option for Requesty provider.

- Fixed duplicate `attempt_completion` command in progress checklist updates.

- Fixed bug preventing announcement banner dismissal.

- Added GPT-OSS models to AWS Bedrock.

Docs!

We hope you enjoy the latest version of Cline. Let us know what you think!

-Nick

Ready to put Cline to work on your hardest problems? Download Cline and experience what happens when an AI agent can actually finish what it starts. Share your complex-problem victories with our community on Reddit and Discord.