Commit messages aren't just for humans

Every few years, something shifts in how we write code and our workflows quietly adapt. Version control changed how we think about saving work. Code review changed how we think about readability. CI/CD changed how we think about testing. Now AI coding agents are changing something we barely think about at all: commit messages.

Your commit history has a new audience. And that audience is reading more carefully than most of your teammates ever did.

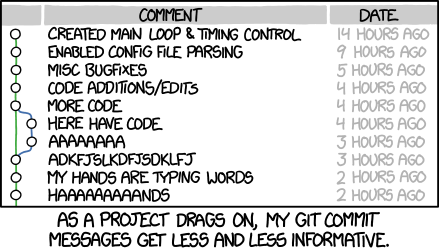

Here's what that looks like day to day. You ask Cline to "continue the refactoring approach from the last few commits." Cline pulls up your recent commit history and finds: "fix", "updates", "more changes", "final fix", "actual final fix". What exactly should it continue? What was the approach? The agent has no thread to follow, so it's left guessing intent from raw diffs alone.

Now imagine the alternative. Your commits read:

"Refactor UserService to async/await pattern", "Apply same async refactor to OrderService", "Update tests for new async behavior". Same work. But now Cline sees the pattern, the scope, and the direction. It continues your refactor across the remaining services without you having to explain anything twice.

This isn't a hypothetical. It's the difference between commits that work for you and commits that work against you in the age of agentic AI.

![xkcd: Git Commit](https://imgs.xkcd.com/comics/git_commit.png)

For decades, we've written commit messages for a purely human audience: reviewers and future maintainers. The classic advice from Structure and Interpretation of Computer Programs, that programs must be written for people to read and only incidentally for machines to execute, applied to commits too. Write something your teammate can understand six months from now. Write something that helps during code review. Write something that explains the "why" behind the change.

That advice is still correct. But it's no longer complete.

AI coding agents now read your commit history as context. When you use Cline's `@git-changes` feature or reference a specific commit hash, your commit messages are fed directly into AI prompts. The agent parses them, reasons about them, and uses them to understand your codebase's evolution. A vague commit message isn't just unhelpful to your teammates; it's also a blind spot in your agent's understanding of your codebase.

Agents don't just passively receive context. They actively use it to make decisions. When you ask Cline to "follow the pattern we've established" or "continue this migration," it looks to your recent commits for signals about what that pattern actually is. The quality of those signals directly impacts the quality of the agent's output.
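To make this concrete, here is a minimal Python sketch of how recent commit subjects might end up as text in front of a model. It is an illustration only, not how Cline works internally: the function names `recent_commit_subjects` and `format_history_context` and the output layout are hypothetical. The only real tool it relies on is `git log` with its `--max-count` and `--pretty=format:%s` options.

```python
import subprocess


def recent_commit_subjects(repo_path: str, limit: int = 5) -> list[str]:
    """Return the subject lines of the most recent commits, newest first."""
    result = subprocess.run(
        ["git", "-C", repo_path, "log", f"--max-count={limit}", "--pretty=format:%s"],
        capture_output=True,
        text=True,
        check=True,
    )
    return [line for line in result.stdout.splitlines() if line.strip()]


def format_history_context(subjects: list[str]) -> str:
    """Render commit subjects as plain text an agent could read (hypothetical layout)."""
    if not subjects:
        return "Recent commits: (none)"
    lines = [f"{i}. {subject}" for i, subject in enumerate(subjects, start=1)]
    return "Recent commits (newest first):\n" + "\n".join(lines)


if __name__ == "__main__":
    # Hard-coded histories so the example runs outside a git repository.
    vague = ["actual final fix", "final fix", "more changes"]
    clear = [
        "Update tests for new async behavior",
        "Apply same async refactor to OrderService",
        "Refactor UserService to async/await pattern",
    ]
    print(format_history_context(vague))
    print()
    print(format_history_context(clear))
```

Printing the two histories side by side shows the point of the article: the text is all the agent gets, so the second list carries a pattern to follow and the first does not.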

What makes a commit useful to both humans and agents

The framework is straightforward: what changed, why it changed, and what it affects.

Consider the difference. "Change auth code" tells Cline something was modified but gives it no direction. "Refactor auth to JWT per security audit requirement" tells it the architectural decision, the motivation, and that similar auth patterns elsewhere should follow the same path. One commit burns tokens and requires follow-up prompting. The other lets the agent extend your reasoning on its own.

Intent matters most. "Refactor auth to use JWT" communicates a direction. "Change auth code" communicates almost nothing. When Cline encounters related code later, intent is what tells it whether to follow the same pattern or leave it alone.

The "why" matters just as much. "Migrating to JWT per security audit requirement" gives Cline a rationale it can apply consistently. Without it, the agent treats every decision as isolated and arbitrary.

Scope prevents collisions. "Update all API endpoints to return consistent error format" signals a broad, in-progress change. Cline knows to be careful when touching related code rather than introducing a conflicting pattern.

When commits carry this kind of signal, agents stop guessing and start extending your work. When they don't, you spend time correcting misinterpretations that clear commits would have prevented.
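One way to make the what/why/affects framework habitual is a tiny helper that assembles the message from those three parts. The sketch below is an assumption-laden example: `build_commit_message` is a hypothetical name, and the `Why:` and `Affects:` labels are an illustrative convention, not a git or Cline standard.

```python
import textwrap


def build_commit_message(what: str, why: str = "", affects: str = "") -> str:
    """Build a commit message: subject says what changed, body says why and what it affects."""
    subject = what.strip()
    if not subject:
        raise ValueError("the 'what' part is required")

    body_parts = []
    if why.strip():
        # Wrap body lines at 72 columns, a common convention for commit bodies.
        body_parts.append(textwrap.fill(f"Why: {why.strip()}", width=72))
    if affects.strip():
        body_parts.append(textwrap.fill(f"Affects: {affects.strip()}", width=72))

    if not body_parts:
        return subject  # small change: a single clear line is enough
    # A blank line separates the subject from the body.
    return subject + "\n\n" + "\n".join(body_parts)


if __name__ == "__main__":
    print(build_commit_message(
        "Refactor auth to JWT",
        why="security audit requirement",
        affects="remaining auth code should follow the same pattern",
    ))
```

The `why` and `affects` arguments are optional on purpose: a small change can stay a single line, while a broader one gets a short body.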

The practical cost of lazy commits

When you ask Cline to "continue this pattern" or "follow the approach we've been using," it searches your recent commits for context. Vague commits force the agent to guess at intent. This leads to more iterations, more token usage, and more corrections. You end up spending time explaining what you meant when clear commits would have communicated it upfront.

Good commits become force multipliers for AI-assisted development. They give the agent a narrative to follow, a thread of reasoning it can extend. Bad commits create friction at exactly the moment you want seamless collaboration.

This isn't about adopting Conventional Commits or writing paragraphs for every change. It's about signal density. A two-line commit with clear intent beats a one-word commit every time. You don't need novels. You need enough context that a capable but literal-minded collaborator can understand what you did and why.
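Signal density can be roughly approximated in code. The following sketch flags subjects that are very short or made up entirely of filler words. The word list, the three-word threshold, and the name `is_low_signal` are all assumptions chosen for illustration, not an established rule.

```python
import re

# Hypothetical filler vocabulary; tune it to your own team's habits.
VAGUE_WORDS = {
    "fix", "fixes", "update", "updates", "change", "changes",
    "wip", "misc", "stuff", "more", "final", "actual",
}


def is_low_signal(subject: str, min_words: int = 3) -> bool:
    """Return True if a commit subject is too short or consists only of filler words."""
    words = re.findall(r"[a-z0-9']+", subject.lower())
    if len(words) < min_words:
        return True
    return all(word in VAGUE_WORDS for word in words)


if __name__ == "__main__":
    for subject in [
        "fix",
        "more changes",
        "actual final fix",
        "Refactor UserService to async/await pattern",
    ]:
        label = "low signal" if is_low_signal(subject) else "ok"
        print(f"{label:10} {subject}")
```

A heuristic like this only catches the most obvious cases. "Change auth code" passes the check even though it says almost nothing about intent, so it is a nudge, not a substitute for judgment.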

The same framework scales with the size of the change. For a small fix, a single line can cover what changed, why, and what it affects; for a larger change, a short paragraph does the job. The goal isn't ceremony; it's clarity.

Your commit history is now part of your development infrastructure. It's not just an audit trail for humans; it's context that your AI tools actively consume. Treat it accordingly.

This becomes even more powerful with Cline's Explain Changes feature, which provides inline explanations of any git diff. When your commits have clear intent, the explanations are richer: Cline can not only tell you what changed but also connect it to the reasoning in your commit messages. Vague commits produce vague explanations.

Try using Cline's git integration features to see how your commit messages translate into AI context. Reference a recent commit with `@[commit-hash]` and watch how Cline interprets what you wrote. It's a fast way to see whether your commits are helping or hurting your workflow.

Join our community on Discord or Reddit to share your experiences with AI-assisted development and how you're adapting your workflows for this new reality.